Digital Ordering Kiosk: Redesign

Project Overview

A complete redesign of the on-premise digital ordering kiosk experience. Our objective was to reduce time on task and non-critical errors for consumers, with the aim of increasing conversion rates for our clients.

On this project, I was the Lead UX Researcher and also mentored the UI Designer on UX best practices.

My Contributions

- Observational Studies (including competitors)
- Contextual Inquiry
- Heuristic Evaluation
- Ideation Sessions
- UX Design Refinement
- Low-fi Moderated Testing
- High-fi Moderated Testing

- My Process -

Observational Studies:

Goal

Establish a thorough understanding of both Flipdish and competitor kiosk ordering experiences by observing how users interact with kiosks in their natural environment. We aim to identify how real-world factors influence behavioural patterns.

Approach

- Naturalistic observation
- 2 clients & 4 competitors
- 2-3 hour sessions during lunch hours
- No interaction or interference with users
- Sessions recorded via written notes

Outcome

From this study, we identified pain points that had previously gone unnoticed. Combined with the heuristic evaluation, it gave us a well-rounded view of what needed to be solved in the redesign. The study also informed the personas and a generalised journey map that drove design decisions during low-fidelity ideation.

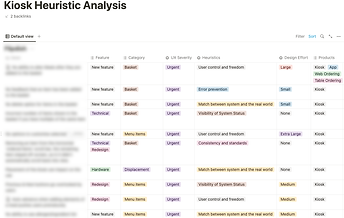

Heuristic Evaluation:

Approach

In between observational study sessions, I performed an in-depth heuristic evaluation using Jakob Nielsen's 10 usability heuristics. During this phase, I also audited the accessibility of our current experience and identified how these items could be prioritised in the redesign.

Outcome

A comprehensive list of known usability issues within the current ordering experience, as well as a detailed list of items to include in the redesign.

Ideation Sessions:

Next, I led a series of ideation sessions with our design team to create a low-fidelity solution based on the previously identified design principles.

Approach

One-hour sessions in Miro in which the team spent a few minutes workshopping ideas, then discussed individual approaches together to determine the best solution.

Outcome

A low-fidelity solution, to be refined by the UI Designer and turned into a prototype for low-fidelity testing.

Low-Fidelity Moderated Usability Testing:

Study Goal

Validate design decisions made in the low-fidelity design.

We aim to understand:

- Users' satisfaction with the interface
- Users' ability to discover menu items efficiently
- Users' ability to add items to their basket and check out without errors (critical & non-critical)

Approach

- In-person moderated study
- 12 participants (6 male, 6 female)
- Recruited through Respondent.ie

Metrics

- Successful task completion: % of tasks that participants complete correctly
- Critical errors: Errors that block the user from successfully completing a task
- Non-critical errors: Errors that do not prevent the user from completing a task but do slow down the experience
- Task satisfaction: How participants felt about each task
- Test satisfaction: How participants felt about the overall experience
- SUPR-Q (Standardised User Experience Percentile Rank Questionnaire)

Outcome & Next Steps

A set list of recommendations and design changes to make before moving into high fidelity.

High-Fidelity Moderated Usability Testing:

Once the design changes from low-fidelity testing had been made and the high-fidelity design was complete, I ran a final round of usability testing. This test used the same approach as the low-fidelity study so that we could track improvements against the same metrics.

Study Goal

Validate changes from the previous study as well as design decisions made in the high-fidelity design.

We aim to understand:

- Users' satisfaction with the interface
- Users' ability to discover menu items efficiently
- Users' ability to add items to their basket and check out without errors (critical & non-critical)

Approach

- In-person moderated study
- 12 participants (6 male, 6 female)
- Recruited through Respondent.ie

Metrics

- Successful task completion: % of tasks that participants complete correctly
- Critical errors: Errors that block the user from successfully completing a task
- Non-critical errors: Errors that do not prevent the user from completing a task but do slow down the experience
- Task satisfaction: How participants felt about each task
- Test satisfaction: How participants felt about the overall experience
- SUPR-Q (Standardised User Experience Percentile Rank Questionnaire)

Design Refinements:

Between each stage of testing, I worked closely with the UI Designer and Product Manager to refine the designs based on the formal recommendations I gathered during each study.

Redesign Outcome

- Significant reduction in the non-critical error rate
- Decrease in time on task between the low-fidelity and high-fidelity tests
- Improved clarity of CTA naming conventions
- Increase in test satisfaction scores from the low-fidelity to the high-fidelity test

Next Steps

- Final refinements to be made by the UI Designer based on recommendations gathered from research
- After go-live, improvements will be tracked with analytics in Amplitude